buarki


MLOps in practice: building and deploying a machine learning app

January 10, 2024


Foreword

This article aims to provide a friendly, practical introduction to Machine Learning Ops (MLOps) by describing how a simple app that performs basic arithmetic on images of single-digit numbers was built. It is not intended to replace deeper study of the topic; it is just a simple hands-on example.

Context

While talking to colleagues and friends lately to gather ideas for a nice Machine Learning project to build, I noticed a knowledge gap: how exactly does one use a trained Machine Learning model? Imagine yourself building a model to solve some problem. You are probably using Jupyter Notebook to clean up the data, apply some normalization and run further tests. Then you finally achieve an acceptable accuracy and decide that the model is ready. How will that model end up being used by an API or a worker to run inference whose results are consumed elsewhere in your company, or by any other system?

That question is addressed by the people involved with Machine Learning Ops (MLOps), a set of practices and policies for making trained models available for use, much like how we publish a software library or a Docker image tag.

To provide a concrete, simple and effective example, this article walks through the process of planning an app that requires Machine Learning. We'll go through planning and building the needed model, saving it, checking how it can be exported and seeing how it can be used in an application.

This whole process was applied to an open project called snapmath. The source code is available on my GitHub, and you can also use the app as it is deployed here. If you would like to see the whole design and planning process, check the project design document.

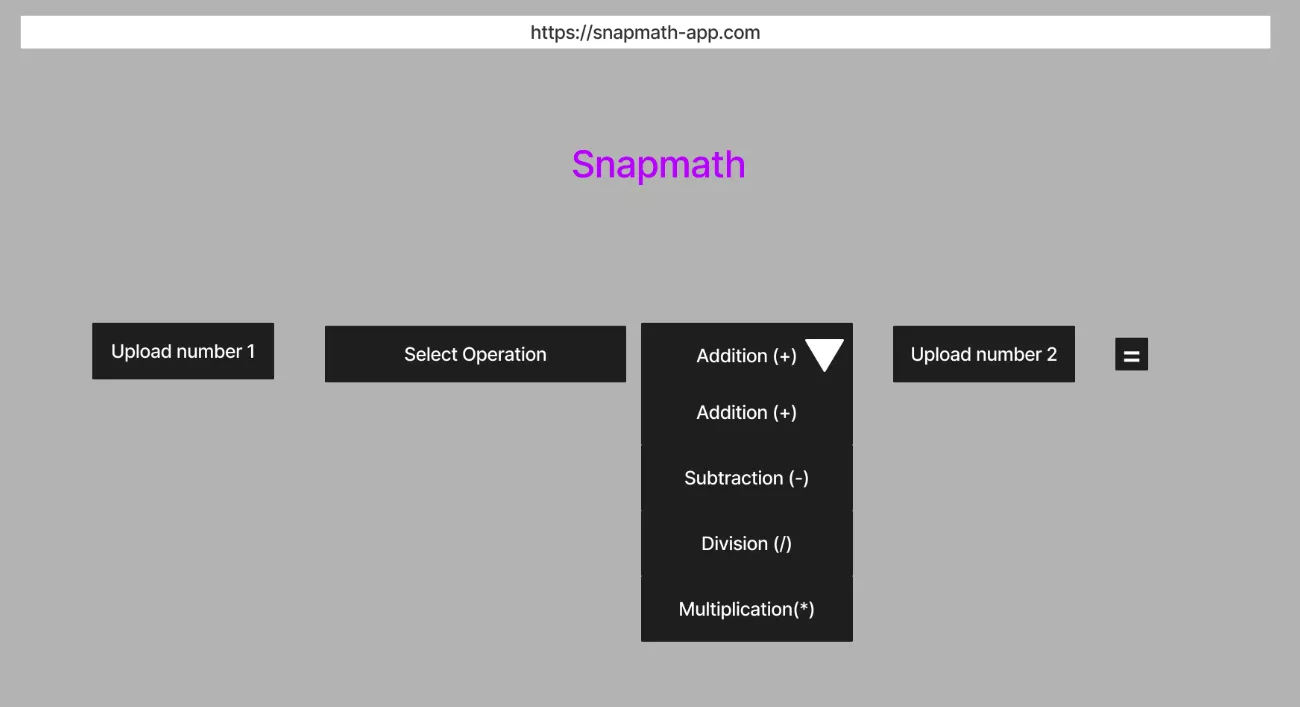

The Problem to be solved

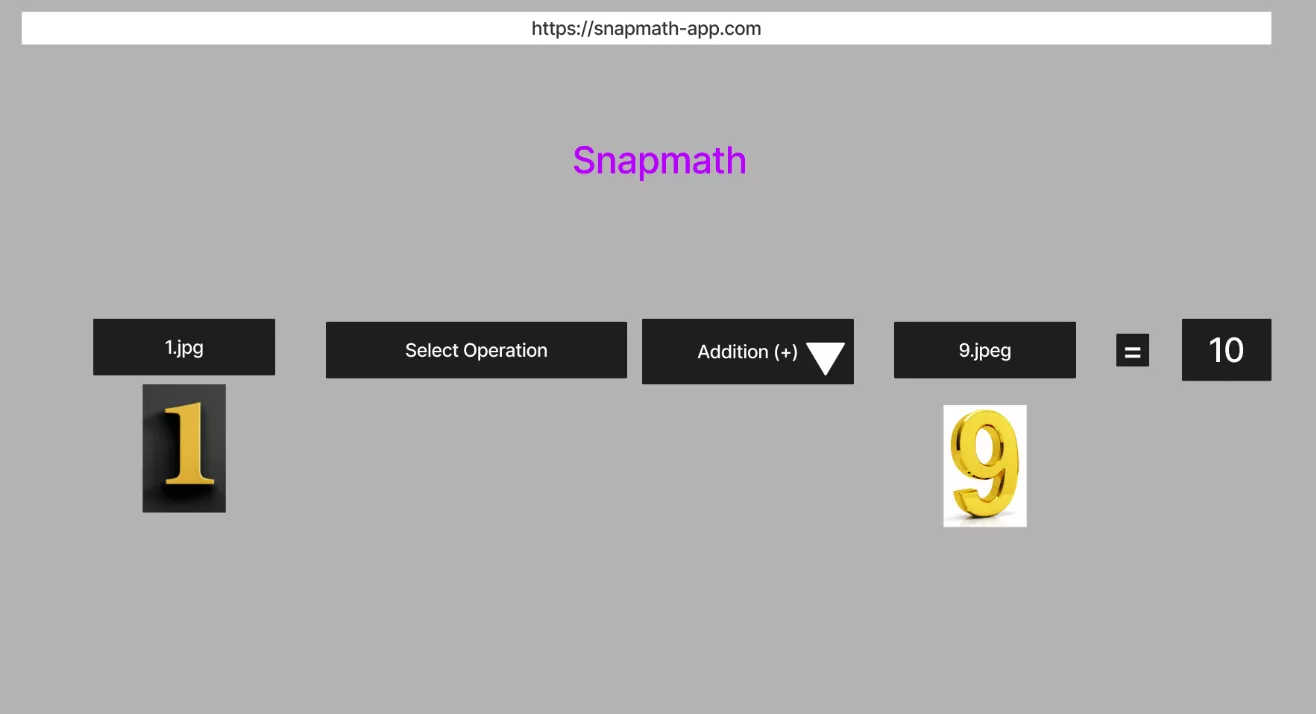

We want to create a simple calculator for single-digit numbers. The app receives the two numbers as images, along with the operation to perform: +, -, / or *. An overview of how the app was originally planned to look can be seen in the images below (and please don't envy my prototyping skills):

And once the user selects the operation to run and inputs the two images:

How was the problem solved?

With the problem stated, the main questions that may arise are: how can one properly build the model? Is there a fancy tool available to do so, or should it be done in an ad hoc way? Once the model is trained, how is it exported? As JSON?

The tool used to build the model was TensorFlow, a powerful end-to-end open source platform for machine learning with a rich ecosystem of tools. To write the needed TensorFlow script, Jupyter Notebook was used, which is a web-based interactive computing platform.

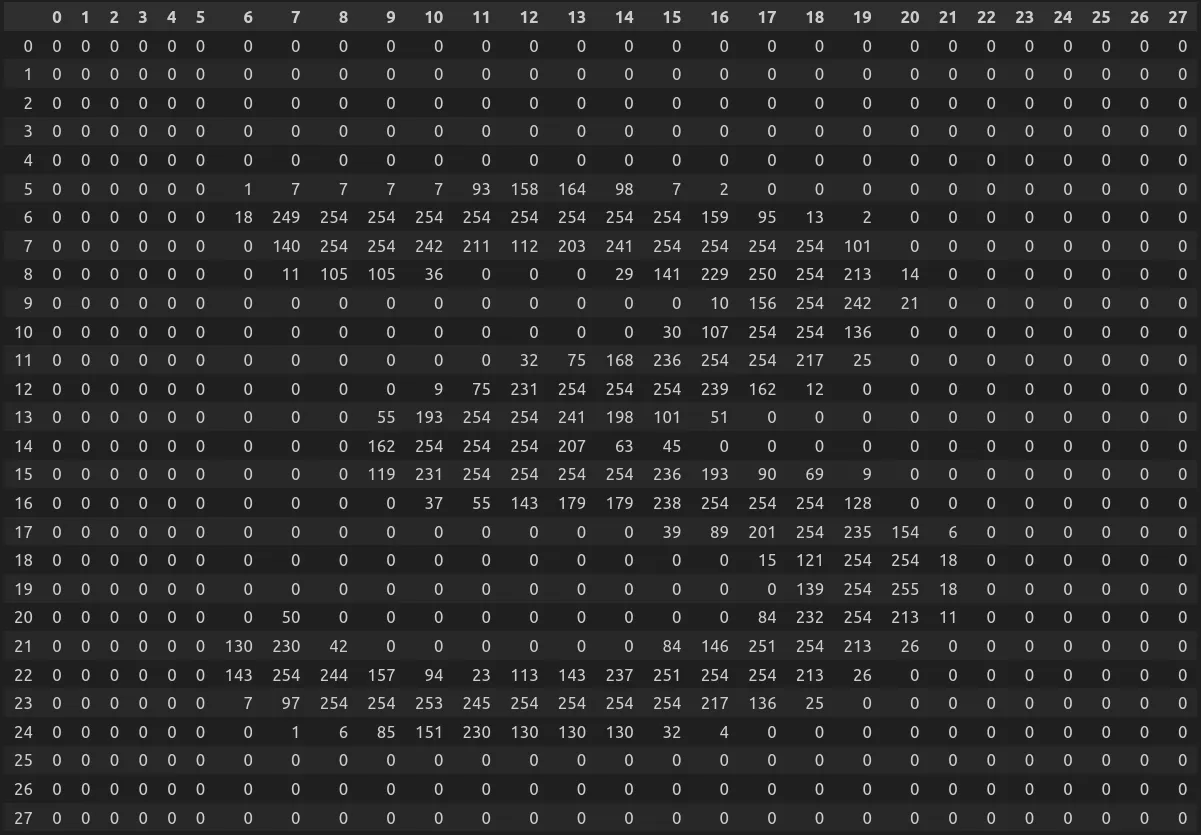

In machine learning projects, the most important part is data. To build a model that solves this problem we need a dataset of digit images, each paired with the digit it represents. A nice, freely available one is MNIST. This dataset provides grayscale images that are 28 pixels wide by 28 pixels high, along with the corresponding digit. The data is provided as a CSV file where each line has 785 columns: 784 to represent the pixels (28 x 28) and 1 to represent the expected digit.
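As a minimal sketch of what reading that CSV involves, the snippet below splits one row into its label and a 28 x 28 grid of pixel values. It assumes the label is the first column and the 784 pixel values follow in row-major order, which is a common layout for MNIST CSV exports but is not confirmed by the project; the function names are hypothetical.

```python
import csv
import io

IMAGE_SIDE = 28
PIXELS_PER_IMAGE = IMAGE_SIDE * IMAGE_SIDE  # 784


def parse_mnist_row(row):
    """Split one CSV row into (label, image), where image is a 28x28 list of ints.

    Assumption: the label comes first, followed by 784 pixels in row-major order.
    """
    if len(row) != PIXELS_PER_IMAGE + 1:
        raise ValueError(f"expected {PIXELS_PER_IMAGE + 1} columns, got {len(row)}")
    label = int(row[0])
    pixels = [int(value) for value in row[1:]]
    # Cut the flat list of 784 values into 28 rows of 28 pixels each.
    image = [
        pixels[start:start + IMAGE_SIDE]
        for start in range(0, PIXELS_PER_IMAGE, IMAGE_SIDE)
    ]
    return label, image


def read_mnist_csv(text_stream):
    """Yield (label, image) pairs from an open CSV text stream without a header."""
    for row in csv.reader(text_stream):
        if row:  # skip blank lines
            yield parse_mnist_row(row)


# Tiny in-memory example: one all-black image labeled 3.
sample_csv = io.StringIO("3," + ",".join(["0"] * PIXELS_PER_IMAGE) + "\n")
samples = list(read_mnist_csv(sample_csv))
```

With a real file, `read_mnist_csv(open(path, newline=""))` would stream the pairs one at a time instead of loading everything into memory.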

With the model built and trained, the next step was loading it into a React app to run inference on a given image. To load the model we needed to "translate" the TensorFlow model trained in Jupyter Notebook into a form that TensorFlow.js is able to understand. To do so we can use tfjs-converter, which is an open source library to load a pre-trained TensorFlow SavedModel, Frozen Model or Session Bundle into the browser and run inference through TensorFlow.js.

It sounds like a lot of things to do, but calm down, let's highlight the goals, non-goals and limitations before proceeding :)

Goals of this project

The goals of this project were straightforward: (1) understand the process of building a model from scratch using TensorFlow; (2) get acquainted with Jupyter Notebook, as it is a tool widely used by the industry; (3) get acquainted with strategies to deploy Machine Learning models once trained, such as TensorFlow Serving.

Non-goals of this project

With this project we do not intend to create a production-ready model with high accuracy. The main focus here is having a model with reasonable accuracy and understanding the process of taking a Machine Learning model from creation to deployment.

The limitations of this project

As the MNIST dataset was used to train our model, the images fed into the model must be 28 pixels wide by 28 pixels high and also be in grayscale. It's reasonable to assume that most people won't have an image with such traits, so we need to preprocess it before feeding it into the model. Due to that preprocessing, the image quality might be degraded, increasing the chance of errors. Thus, it's recommended to use small images only.

Building the model with Convolutional Neural Networks

Because digits can be drawn in many different ways, one relevant aspect when planning the neural network is invariance: the app may be fed many different forms of the number 1, varying in position (translation), size, perspective and lighting. The images below give an example of this.

To effectively extract features that enable the identification of a "1" regardless of these variations, we employ convolutional neural networks (CNNs). The convolutional layers in CNNs are adept at scanning local regions of the input images, enabling the network to learn hierarchical features. This is particularly valuable for capturing essential patterns despite variations in appearance.

And to further make this feature extraction consistent, we can combine the convolutions with pooling as it helps in creating a more abstract representation of the input, making the model less sensitive to the exact spatial location of features. This means that even if the position, size, or lighting conditions of a drawn "1" differ, the CNN's feature extraction mechanism remains consistent, facilitating accurate identification.
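To illustrate the pooling part, here is a small pure-Python sketch of 2x2 max pooling (an assumption for illustration: the article does not state which pooling type or window size the project uses). It shows the same activation, detected one pixel apart, producing an identical pooled output.

```python
def max_pool_2x2(feature_map):
    """Apply 2x2 max pooling with stride 2 to a 2D list with even dimensions."""
    pooled = []
    for row in range(0, len(feature_map), 2):
        pooled_row = []
        for col in range(0, len(feature_map[0]), 2):
            # Keep only the strongest activation inside each 2x2 window.
            window = (
                feature_map[row][col], feature_map[row][col + 1],
                feature_map[row + 1][col], feature_map[row + 1][col + 1],
            )
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


# The same activation, detected one pixel apart (still inside one pooling window).
original = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
shifted = [
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

pooled_original = max_pool_2x2(original)
pooled_shifted = max_pool_2x2(shifted)
```

Both calls return the same 2x2 map, so the layers after pooling cannot tell the two inputs apart. A shift that crosses a window boundary would change the output, which is why pooling reduces sensitivity to position rather than removing it entirely.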

Thus, the first part of the neural network is in charge of detecting and collecting the features from the images and placing them in a vector. That vector is then fed into a regular fully connected neural network to perform the learning.

Once the features that make a "1" a "1" are found, we pass them into the fully connected network and train it, adjusting the weights until it gives accurate predictions of which digit the image corresponds to.

The full process of building the model, in baby steps, can be seen in this Jupyter Notebook file available on my GitHub.

Exporting the model

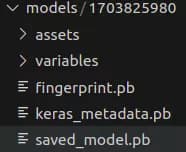

Once the model is properly trained, we can export it in a variety of formats. So far, I've only used the tf_saved_model format (TensorFlow's SavedModel), and it seems to be the recommended way for now. Once we export it, TensorFlow creates a directory similar to the one below:

It's also worth pointing out that the exported model should be named using a timestamp for better version control. In the case above the name is 1703825980.
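A minimal sketch of how such a timestamped name could be produced, assuming the name is a Unix timestamp in seconds (1703825980 is consistent with that, but the article does not say so). The base directory `models/digit_classifier` and the helper name are hypothetical.

```python
import time
from pathlib import Path


def versioned_export_dir(base_dir, now=None):
    """Return base_dir/<unix timestamp in seconds> as a Path.

    `now` can be passed explicitly to make the result reproducible.
    """
    timestamp = int(time.time() if now is None else now)
    return Path(base_dir) / str(timestamp)


# Reproduce the directory name used in the article.
export_dir = versioned_export_dir("models/digit_classifier", now=1703825980)

# With TensorFlow installed, the trained model could then be written with
# something like: tf.saved_model.save(model, str(export_dir))
```

Because newer exports get larger numbers, sorting the directory names also sorts the model versions chronologically.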

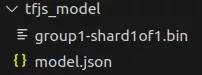

Translating the model to TensorFlow.js

As said before, in order to use the built model with TensorFlow.js we first have to "translate" it to a compatible format. To do so we used tfjs-converter. By converting the model from the tf_saved_model format we get a directory with the following structure:

Loading the model

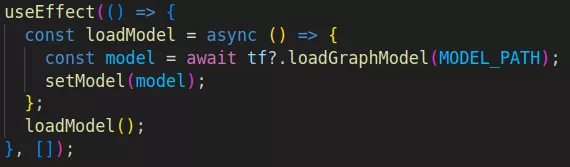

TensorFlow.js has a method, loadGraphModel, which accepts a URL that serves the model to be loaded. As this app is built on top of Next.js, the "translated" model was placed under the public directory, so the model is imported as shown in the image below:

That way, once the app is rendered in the browser, the model is loaded and becomes available to run inference on a given image.

Preprocessing a given image before running inference

The model was trained using 28x28 grayscale images. Most images fed into the model will probably differ from that: for instance, an image could be in color, or have a shape of 173x100.

Moreover, the MNIST dataset has a peculiar way to represent images: the "effective image area" representing the number per se has values in the range of 0-255, while the "empty areas" are considered 0. One concrete example of number 3 can be seen below:

Real world images are represented using a different approach in which the background is typically lighter than the foreground. Hence, we need to invert the pixel values.

Due to that, we need to preprocess the given images before running the model inference. This process must involve: (1) converting the image to grayscale (to ensure that the input image has a single channel); (2) inverting the pixels (to ensure that the foreground is lighter than the background, like the images used to train the model); (3) normalizing the image (dividing each element by 255); (4) resizing the image to 28x28 (the shape that the model expects).

Such process performed against an image (X, Y, W), where X is the width, Y the height and W the channels, should return an image with shape (28, 28, 1).

And one last step before feeding the image into the model is creating an input tensor having shape (1, 28, 28, 1), which means a tensor with one element (the image) with 28 pixels of width, 28 pixels of height and 1 channel.
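The steps above can be sketched in plain Python using nested lists. This is an illustration only, not the app's actual code (which runs in the browser with TensorFlow.js): the luminance weights for the grayscale conversion and the nearest-neighbor resize are assumptions, and all function names are hypothetical.

```python
TARGET_SIDE = 28


def to_grayscale(rgb_image):
    """Collapse each (r, g, b) pixel to one luminance value in 0..255."""
    return [
        [0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
        for row in rgb_image
    ]


def invert(gray_image):
    """Flip intensities so a dark digit on a light background becomes light on dark."""
    return [[255.0 - value for value in row] for row in gray_image]


def normalize(gray_image):
    """Scale 0..255 values to 0..1, clamping to guard against float rounding."""
    return [
        [min(1.0, max(0.0, value / 255.0)) for value in row]
        for row in gray_image
    ]


def resize_nearest(image, side=TARGET_SIDE):
    """Resize a 2D image to side x side using nearest-neighbor sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[(y * height) // side][(x * width) // side] for x in range(side)]
        for y in range(side)
    ]


def to_input_tensor(rgb_image):
    """Return a nested list shaped (1, 28, 28, 1): batch, rows, columns, channels."""
    image = resize_nearest(normalize(invert(to_grayscale(rgb_image))))
    with_channel = [[[value] for value in row] for row in image]  # add channel axis
    return [with_channel]  # add batch axis


# Example: a 173 (wide) x 100 (high) white image with a black vertical stroke.
WIDTH, HEIGHT = 173, 100
picture = [
    [(0, 0, 0) if 80 <= x < 95 else (255, 255, 255) for x in range(WIDTH)]
    for y in range(HEIGHT)
]
batch = to_input_tensor(picture)
```

After these steps the white background ends up close to 0 and the black stroke at 1, matching the MNIST convention of a light digit on a dark background. Nearest-neighbor sampling is the simplest resize to write by hand; a real pipeline would more likely use the interpolation provided by its image or tensor library.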

Using the app

If you just want to use the app, you can visit snapmath. In case you want to run the app locally, just clone the project and follow the instructions to run it. The repo also provides a version using Python and Flask, with its own instructions.